Detox: An AI system to detect and prevent toxic speech

Cyberbullying is everyone’s business and the best response is a proactive one. Our new research project Detox is a step forward in figuring out how to help children and parents navigate today’s complex digital universe.

Bullying in its various forms has long been a difficult part of the social, community, and school life of children. With the advance of digital technologies and the wide use of smartphones by children and young teens, cyberbullying and kids' online safety have emerged as alarming sources of concern. For example, a poll released by UNICEF found that one in three young people in 30 countries said they had been a victim of online bullying, with one in five reporting having skipped school due to cyberbullying and violence.

Both bullying and cyberbullying violate children's integrity, dignity, and self-respect. Both can lead to diminished confidence, loss of self-esteem, many long-term adverse effects and, in the most extreme cases, suicide. Unlike traditional bullying, with its physical abuse and face-to-face verbal attacks, cyberbullying takes place on social media, forums, messaging platforms, gaming platforms, and mobile phones. We must recognize that cyberbullying has no physical constraints. In cyberspace, vicious acts can be carried out from behind computer screens that anonymize a perpetrator, who could be anyone from almost anywhere in the world. More terrifying still is the speed and scale with which a bullying action can go viral and reach a vast audience in seconds. Sadly, the damage that cyberbullying does to children's mental health is therefore immediate, devastating, and long-lasting.

Parents and teachers are effectively the first line of defense against cyberbullying. They can teach children that bullying in any form is never justified. They can also show them how to act against hostile comments, messages, or cruel photos, how to block bullies, and how to report such content and seek help. The trouble is that once children see toxic comments, they can't unsee them. For their part, social media companies have taken a tough stance against cyberbullying by ramping up content moderation, simplifying and expediting content-reporting procedures, and enforcing stringent policies. These companies, however, are overwhelmed by the sheer volume of toxic content, and human moderators simply can't cope with the load. It is therefore no surprise that, despite enormous efforts from parents, education practitioners, platform providers, and governments, cyberbullying remains an issue that is not sufficiently addressed.

Detecting cyberbullying online is notoriously difficult, because people use offensive language for all sorts of reasons. Simply spotting keywords is not an ideal solution, especially given the ever-growing vocabulary of Internet slang. Besides, some of the meanest comments do not use words that are overtly or categorically offensive. On top of that, we are dealing with a needle-in-a-haystack problem, since only a tiny portion of Internet content is toxic.
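To make the limits of keyword spotting concrete, here is a minimal sketch of a naive blocklist filter. The blocklist, the `keyword_flag` function, and the sample comments are all hypothetical and written only for illustration; they are not taken from Detox.

```python
import re

# Hypothetical blocklist for illustration only; not Detox's vocabulary.
BLOCKLIST = {"stupid", "idiot", "loser"}

def keyword_flag(text):
    """Flag a comment if any of its words appears on the blocklist."""
    words = re.findall(r"[a-z']+", text.lower())
    return any(word in BLOCKLIST for word in words)

comments = [
    "you are such a loser",                   # overtly offensive wording
    "I felt so stupid for missing the bus",   # harmless self-deprecation
    "nobody would even notice if you left",   # hurtful, but no listed word
    "ur such a l0ser",                        # slang spelling evades the list
]

for comment in comments:
    print(keyword_flag(comment), "|", comment)
```

In this toy case the filter flags the harmless second comment and misses both the third and the fourth, which are the failure modes described above: offensive words used for benign reasons, mean comments without overtly offensive words, and slang that outpaces any fixed list.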

To address this challenge head-on, we at NortonLifeLock Labs applied recent AI techniques in our newest research endeavor: the Detox neural engine, designed to automatically detect harmful content at scale with high accuracy.

The Detox core consists of an intelligent system trained to spot toxic content by recognizing language nuances. In essence, our approach mimics the way a junior human content moderator is trained to spot cyberbullying content. During such training, trainees are shown different examples of toxic comments, have the words and slang explained to them, and learn the implicit meaning that emerges when words are combined.

In our case, we take a similar approach to coach a digital AI moderator. To this end, we built an attention-based bidirectional neural engine. This sequential model consists of two layers: the first reads the input sentence in the forward direction and the second reads it in the backward direction. This setup increases the amount of context available to the model (e.g., knowing which words immediately precede and follow a given word in a sentence). We also incorporate an attention mechanism that enables the neural engine to focus selectively on the information most relevant to toxic content. This way, we can train the Detox neural engine to understand both the contextual information of the words in a sentence and the pertinent patterns behind a toxic comment.

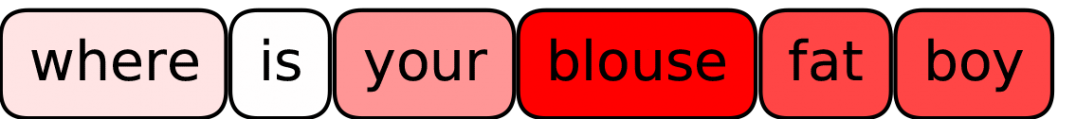
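The bidirectional, attention-based design described above can be sketched in a few lines of plain Python. This is a toy under stated assumptions, not the Detox implementation: the class name `ToyBiAttention`, the simple tanh recurrence, the dimension of 4, and the random, untrained weights are all choices made for this illustration. It only shows how a forward pass, a backward pass, and attention pooling fit together.

```python
import math
import random

random.seed(0)
DIM = 4  # size of embeddings and hidden states (arbitrary for this sketch)

def rand_vec(n):
    return [random.uniform(-0.5, 0.5) for _ in range(n)]

def rand_mat(rows, cols):
    return [rand_vec(cols) for _ in range(rows)]

def matvec(matrix, vector):
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]

class ToyBiAttention:
    """Untrained bidirectional recurrent encoder with attention pooling."""

    def __init__(self, vocab):
        self.emb = {word: rand_vec(DIM) for word in vocab}
        self.unk = rand_vec(DIM)  # shared vector for unknown words
        # One (input weights, recurrent weights) pair per direction.
        self.fwd = (rand_mat(DIM, DIM), rand_mat(DIM, DIM))
        self.bwd = (rand_mat(DIM, DIM), rand_mat(DIM, DIM))
        self.att = rand_vec(2 * DIM)  # scores each position for attention
        self.out = rand_vec(2 * DIM)  # maps the pooled vector to a logit

    def _run(self, params, vectors):
        """Simple tanh recurrence; returns one hidden state per input."""
        w_in, w_rec = params
        h = [0.0] * DIM
        states = []
        for x in vectors:
            h = [math.tanh(a + b)
                 for a, b in zip(matvec(w_in, x), matvec(w_rec, h))]
            states.append(h)
        return states

    def score(self, tokens):
        xs = [self.emb.get(t, self.unk) for t in tokens]
        left = self._run(self.fwd, xs)               # reads left to right
        right = self._run(self.bwd, xs[::-1])[::-1]  # reads right to left, realigned
        states = [l + r for l, r in zip(left, right)]  # concatenate per word
        # Attention: softmax over one relevance score per position.
        raw = [sum(a * s for a, s in zip(self.att, st)) for st in states]
        peak = max(raw)
        exps = [math.exp(r - peak) for r in raw]
        total = sum(exps)
        weights = [e / total for e in exps]
        # Weighted sum of states, then a sigmoid to get a score in (0, 1).
        pooled = [sum(w * st[i] for w, st in zip(weights, states))
                  for i in range(2 * DIM)]
        logit = sum(o * p for o, p in zip(self.out, pooled))
        return 1 / (1 + math.exp(-logit)), weights

tokens = "you are not welcome here".split()
model = ToyBiAttention(vocab=tokens)
probability, weights = model.score(tokens)
print(round(probability, 3), [round(w, 3) for w in weights])
```

Because the weights are random, the printed score carries no meaning. In a trained model, the attention weights would indicate which words contributed most to the decision, and the concatenated forward and backward states would give each word a view of both its left and right context.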

Once trained and fine-tuned, the AI-powered Detox engine would be designed to pinpoint less obvious bullying patterns. For example, Detox can recognize the implicit yet toxic association between "blouse" and "boy", which may be manipulated to attack a child based on their sexual orientation. Deployed in the right application, the system would then proactively block this toxic content and stop the bullying before it reaches the victim. At the same time, a system could be designed to alert parents when toxic content is recognized, so that they can take action to protect their children’s experiences online. In the examples below, we illustrate the detection capability of Detox. Please note that, due to the nature of toxic comments, the examples contain text that may be considered profane, vulgar, or offensive.

Beyond blocking harmful content, it is crucial to realize that reading negative content can be just as poisonous. Just picture a kid obsessively reading reckless comments from their peers. Such content is not strictly bullying, but it can still lead to negative thoughts and feelings, which can in turn cause isolation, depression, and mood changes. For this reason, the Detox engine could also allow us to track the overall sentiment of the content browsed by children. This way, parents can gain useful insights into how websites and social networks affect their children. In turn, parents can limit the negative impact on children's mental health by proactively engaging in meaningful conversations with them.
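One way to picture this kind of sentiment tracking is as a per-site average of sentiment scores. The sketch below assumes each piece of browsed content has already been given a score between -1.0 (very negative) and 1.0 (very positive) by some model. The function names, the threshold, the site names, and the scores are all made up for illustration; the article does not describe how Detox computes or aggregates sentiment.

```python
from collections import defaultdict

def average_sentiment_by_site(scored_items):
    """Average the sentiment scores per site.

    scored_items: iterable of (site, score) pairs, with each score
    assumed to lie in [-1.0, 1.0].
    """
    totals = defaultdict(lambda: [0.0, 0])  # site -> [sum of scores, count]
    for site, score in scored_items:
        totals[site][0] += score
        totals[site][1] += 1
    return {site: total / count for site, (total, count) in totals.items()}

def sites_to_review(averages, threshold=-0.3):
    """Return sites whose average sentiment falls below the threshold."""
    return sorted(site for site, avg in averages.items() if avg < threshold)

# Illustrative values only, not real measurements.
browsed = [
    ("forum.example", -0.8),
    ("forum.example", -0.4),
    ("videos.example", 0.5),
    ("videos.example", 0.1),
]

averages = average_sentiment_by_site(browsed)
print(sites_to_review(averages))
```

A summary like this would give parents a starting point for a conversation rather than a verdict; the threshold here is arbitrary and would need tuning in any real application.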

Cyberbullying is everyone’s business and the best response is a proactive one. Our new research project Detox promotes cyber safety for kids and is a step forward in helping children and parents navigate today’s complex digital universe. Stay tuned for more updates on Detox!

Editorial note: Our articles provide educational information for you. Our offerings may not cover or protect against every type of crime, fraud, or threat we write about. Our goal is to increase awareness about Cyber Safety. Please review complete Terms during enrollment or setup. Remember that no one can prevent all identity theft or cybercrime, and that LifeLock does not monitor all transactions at all businesses. The Norton and LifeLock brands are part of Gen Digital Inc.
